Most database administrators are at least to some extent familiar with what memory does for SQL server. This is especially true for scenarios such as configuring minimum memory in maximum memory, checkpoint, lazy writer etc. However, there is a shocking lack of understanding how the hardware itself plays a key role in the memory utilisation of the server. Ask any database administrator the difference between Single, Dual and Quad channel memory or even the Impact of memory speeds & over clocking when coupled with the motherboard and CPU and most likely you’re going to get a blank expression. Add to this the degradation of performance when not using twinned RAM or even understanding how the channels work and you’re looking at a very powerful server that has been configured poorly that it doesn’t really manage memory effectively. Most DBAs might be aware to some extent of features such as NUMA but may not be aware of how it plays a role at the hardware level. I remember there was a time when I used talk about 32 bit versus 64 bit OS and how address channels worked and the way the OS actually addressed memory. Today with features like in-memory OLTP, hybrid buffer pool and PMEM devices this confusion is starting to become even more problematic. While a large number of developers and administrators may not need to be aware of this simply because they would be using cloud resources the fact remains that organisations continue to use dedicated hardware and as such need to know how server configuration and hardware choices impact their build and long terms server utilization.

ECC Memory

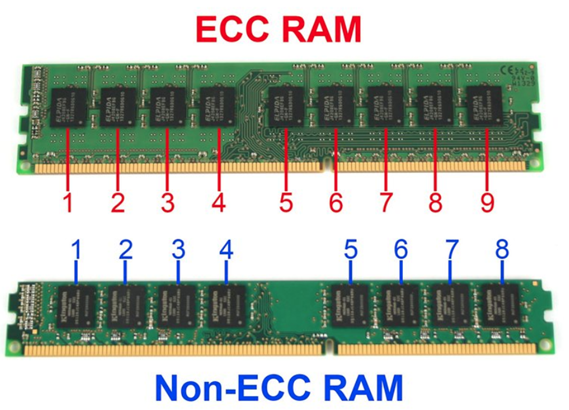

Let us start with ECC or Error Correcting CHIP memory. This is a no brainer as far as memory configuration for SQL Server databases are concerned. Error correcting memory as its name suggest has one additional chip to store checksum values for data being stored in the ram. Similar to how a RAID 5 configuration supports error correction at the disc level. The below diagram shows a ram module with ECC and another one without ECC

As more and more features within the SQL server database engine rely on memory, having error correcting memory is really not a choice anymore. It is slightly more expensive (as must be expected) and the performance is pretty much the same as that of non-error correcting memory. A lot of the time the corruption that happens in the disc is a result of corruption that originated in the ram and having ECC memory reduces the probability that bad data was written from the ram to the disc. The advantage of having this feature is that DBCC CHECKDB is far less likely to return corrupted pages as a result of bad memory. Data that is stored in in-memory tables also benefit from the additional protection provided at the RAM level.

Memory Speed

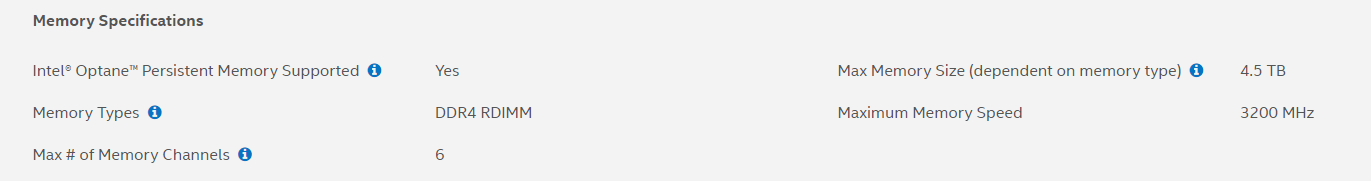

Below is the spec for the Intel Platinum Xenon 8380HL processor.

As you can see from the screenshot below the CPU also comes with memory specifications. In this case you can see for a high-end Intel platinum Xenon processor the maximum memory speed is 3200 megahertz. The speed of the memory obviously affects the latency and transfer rates when accessing data off the ram higher speeds mean lower latency but also increased cost as well as power consumption. It is important to understand that adding very high capacity and high-speed RAM will not yield any benefit if the underlying motherboard and processor do not support those speeds. This is referred to as over clocking and often implemented in gaming and graphic design systems. It might be tempting to go for very high speed memory to really boost system performance but keep in mind that sustained speed can over heat the RAM module and result in corruption and errors creeping in. High end RAM manufactures often attach heatsinks to the RAM to help counter this issue but isn’t recommended for a database server.

Memory channels

Almost all modern processors and motherboards support at least dual channel memory. You can identify the number of channels by looking at the specification on the motherboard, CPUs also have information regarding how many channels they would be able to support however the determining factor would usually be the motherboard. Typically you will find a dual channel motherboard having 4 memory slots with two different colours similar to the one shown below.

Slots that have the same colour belong to the same channel. When installing ram into the server it is important to ensure that there is a balance and that both channels are being used especially if you have multiple ram modules. Keep in mind that all the modules have to be from the same brand with the same speed and the same capacity any mismatch in this will result in certain motherboards switching to single channel mode. It is generally considered a best practise to buy ram modules in pairs to ensure that they are manufactured as close or as similar to each other as possible and therefore can be twinned. This ensures consistent performance when accessing data from the twinned modules.

Say for example you need to install 16 GB of RAM to a server you could potentially just buy one module of 16GB capacity or break it down into two modules of 8GB each. In such cases it would be beneficial to actually use two modules even though it might mean consuming additional slots. Let us now explore why this is good.

Difference between DUAL Channel and Single Channel RAM

A key point to keep in mind when purchasing memory for your server is to check the number of channels available to it. As mentioned earlier most servers today support at least dual channel and in other cases even quad channel. A channel is basically the pathway through which the RAM communicates to the memory controller. The memory controller essentially coordinates accessing RAM and transferring data to the cache and CPU. A single channel memory as its name indicates has only one set of address lines between the memory controller and the ram modules and therefore would have a slower data transfer rate. Dual channel on the other hand would have double the number of pathways and therefore double the bandwidth. The best way to visualise this is to imagine a single lane road where the speed limit is 100 kilometres per hour versus a 2 lane road with the speed limit 50 kilometres per hour. In either case the number of cars that could transfer between any given points would be roughly the same but you can obviously see the benefits of having multiple lanes when there is a pileup. Coupled with the fact that since you have multiple lanes it is always possible for you at a later stage to increase the speed (upgrade the RAM to one with higher speed) if needed however in a single lane it is not possible to add an additional Lane once you have reached the limits of the RAM module.

DDR4 vs DDR 5

Next, we would need to talk about DDR 4 versus DDR5. Again, this is fairly straight forward because it’s an industry standard. Modern servers typically are running on DDR 4. DDR 5 was announced in 2020 and future RAM modules are most likely going to DDR5. This means that you will need to keep this change in mind when you are procuring a new server because RAM modules are not interchangeable between generations. What this means is a DDR 4 module will not fit in a DDR 5 slot and vice versa. The 5th generation DDR promises lower power consumption and double the transfer speeds which means that for DDR4 while we topped out at about 3.5 GB per second in DDR 5 it’ll be close to about 6. This means faster transfer rates and therefore more throughput from your RAM modules and could be a very important factor for in-memory OLTP and other memory related operations. Coupled with the topics mentioned earlier the combination would mean extremely efficient data transfer from the RAM to the cache and therefore to the CPU. We saw spurt of improvement in SSD and NVME tech in the last few decades and are poised to see a similar change in RAM tech as well. This means the DBA needs to be familiar with the upcoming changes to ensure the server doesn’t become outdated a few short years after purchase.

So how do you choose?

Always prefer ECC over others, then prefer DDR 5 over DDR 4, then dual channel over single channel and higher speeds over lower. Then verify if the Motherboard and the CPU will support that configuration and tune accordingly.

Need more general tips?

https://docs.microsoft.com/en-us/windows-server/administration/performance-tuning/hardware/

Please consider subscribing !