A common requirement I have is to generate test data. Sometimes for trainings and sometimes for consulting work. As you may have seen from many previous video and blogs typically use Airlines Ontime dataset for large databases and Mockaroo for smaller datasets. However recently I wanted to try and see if I could achieve comparable performance to the SSIS world record and in order to be able to measure comparatively it was decided to perform the test using the same dataset as the one for the ETL World Record.

A direct quote from the website mentioned the DBGEn tool from the TPC-H benchmark so it was decided to use the same.

DBGEN tool from the TPC-H benchmark was used to generate 1.18 TB of source data.

In this blog I show how you can use the same tool to generate Terabytes of data as needed.

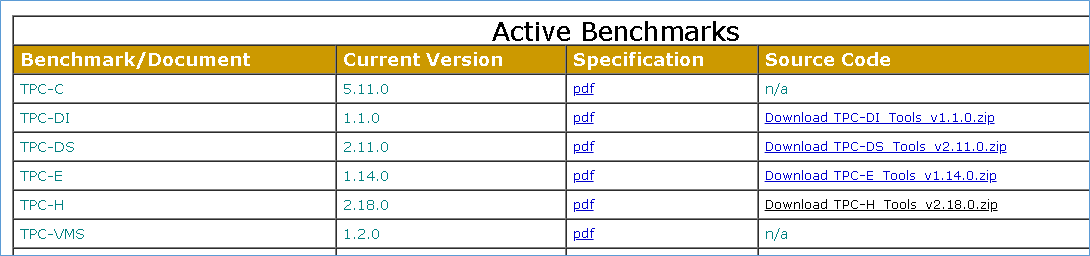

The first step is to visit the TPC.org website download link and download the solutions for compiling the required binaries. As you can see below we went for the TPC H which is the benchmark for data warehouse you can also use the TPC –E (newer version compared to TPC-C)

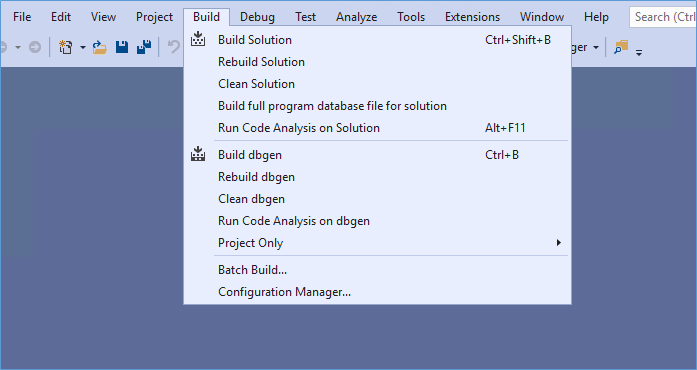

Once downloaded you can use Visual Studio to open the solution and build the project. I used the option Build full program database file for solution since I kept getting errors after the upgrade to Visual Studio 2019.

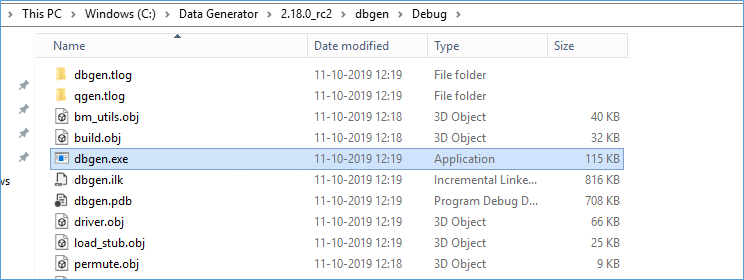

Notice that there is no need to make any changes as such simply build the project. After which in the Debug Folder you will get the dbgen.exe application file. As shown below.

You need to copy this file one level up (in my case into the folder dbgen) since running the application from this location throws error because of missing dependent files.

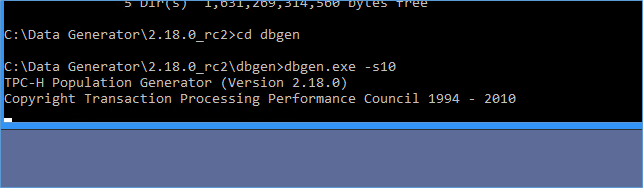

Once copied one level up you can open command prompt in Administrator mode and simple run the application file as it is. This generates 1 GB worth of data.

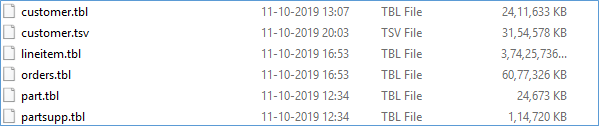

As you can see from the above screenshot I have added a switch to generate 10 GB of data hence the –s10 after calling the application. The end result will look like below

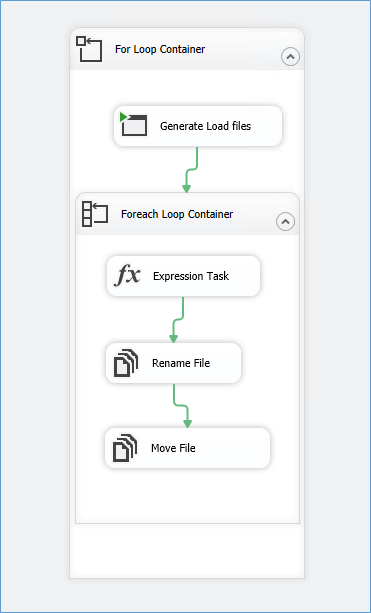

You will get multiple files each of which is pretty huge but representative of what a real world OMS would look like. For my experiment I wanted not one single file per a table and so I ended up creating a simple SSIS package that would create around 50 files for each table. As shown below.

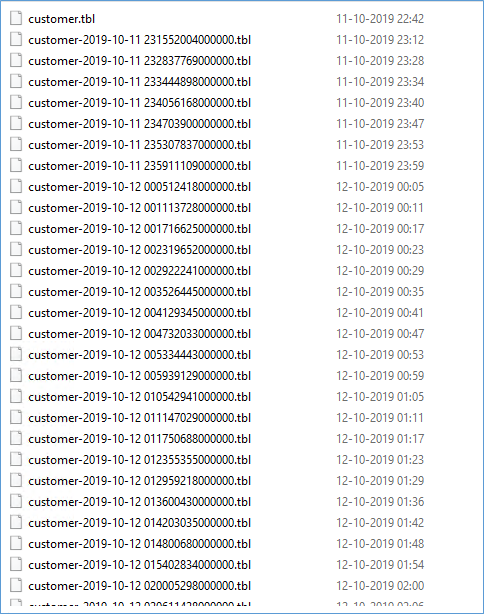

Because it kept asking to overwrite files I had to add additional logic to move files to a new folder with a new naming convention resulting in.

Now that I have the data the next step was to load the data into the underlying tables and so I needed object creation script which I have mentioned below for SQL Server.

USE [tpch] GO /****** Object: Table [dbo].[CUSTOMER] Script Date: 15-10-2019 08:59:41 ******/

SET ANSI_NULLS ON GO

SET QUOTED_IDENTIFIER ON GO

CREATE TABLE [dbo].[CUSTOMER] (

[C_CUSTKEY] [int] NOT NULL

,[C_NAME] [varchar](25) NULL

,[C_ADDRESS] [varchar](40) NULL

,[C_NATIONKEY] [bigint] NOT NULL

,[C_PHONE] [char](15) NULL

,[C_ACCTBAL] [decimal](18, 0) NULL

,[C_MKTSEGMENT] [char](10) NULL

,[C_COMMENT] [varchar](117) NULL

) ON [PRIMARY] GO /****** Object: Table [dbo].[LINEITEM] Script Date: 15-10-2019 08:59:41 ******/

SET ANSI_NULLS ON GO

SET QUOTED_IDENTIFIER ON GO

CREATE TABLE [dbo].[LINEITEM] (

[L_ORDERKEY] [bigint] NOT NULL

,[L_PARTKEY] [bigint] NOT NULL

,[L_SUPPKEY] [bigint] NOT NULL

,[L_LINENUMBER] [int] NOT NULL

,[L_QUANTITY] [decimal](18, 0) NULL

,[L_EXTENDEDPRICE] [decimal](18, 0) NULL

,[L_DISCOUNT] [decimal](18, 0) NULL

,[L_TAX] [decimal](18, 0) NULL

,[L_RETURNFLAG] [char](1) NULL

,[L_LINESTATUS] [char](1) NULL

,[L_SHIPDATE] [date] NULL

,[L_COMMITDATE] [date] NULL

,[L_RECEIPTDATE] [date] NULL

,[L_SHIPINSTRUCT] [char](25) NULL

,[L_SHIPMODE] [char](10) NULL

,[L_COMMENT] [varchar](44) NULL

,PRIMARY KEY CLUSTERED (

[L_ORDERKEY] ASC

,[L_LINENUMBER] ASC

) WITH (

PAD_INDEX = OFF

,STATISTICS_NORECOMPUTE = OFF

,IGNORE_DUP_KEY = OFF

,ALLOW_ROW_LOCKS = ON

,ALLOW_PAGE_LOCKS = ON

,OPTIMIZE_FOR_SEQUENTIAL_KEY = OFF

) ON [PRIMARY]

) ON [PRIMARY] GO /****** Object: Table [dbo].[NATION] Script Date: 15-10-2019 08:59:41 ******/

SET ANSI_NULLS ON GO

SET QUOTED_IDENTIFIER ON GO

CREATE TABLE [dbo].[NATION] (

[N_NATIONKEY] [int] NOT NULL

,[N_NAME] [char](25) NULL

,[N_REGIONKEY] [bigint] NOT NULL

,[N_COMMENT] [varchar](152) NULL

,PRIMARY KEY CLUSTERED ([N_NATIONKEY] ASC) WITH (

PAD_INDEX = OFF

,STATISTICS_NORECOMPUTE = OFF

,IGNORE_DUP_KEY = OFF

,ALLOW_ROW_LOCKS = ON

,ALLOW_PAGE_LOCKS = ON

,OPTIMIZE_FOR_SEQUENTIAL_KEY = OFF

) ON [PRIMARY]

) ON [PRIMARY] GO /****** Object: Table [dbo].[ORDERS] Script Date: 15-10-2019 08:59:41 ******/

SET ANSI_NULLS ON GO

SET QUOTED_IDENTIFIER ON GO

CREATE TABLE [dbo].[ORDERS] (

[O_ORDERKEY] [int] NOT NULL

,[O_CUSTKEY] [bigint] NOT NULL

,[O_ORDERSTATUS] [char](1) NULL

,[O_TOTALPRICE] [decimal](18, 0) NULL

,[O_ORDERDATE] [date] NULL

,[O_ORDERPRIORITY] [char](15) NULL

,[O_CLERK] [char](15) NULL

,[O_SHIPPRIORITY] [int] NULL

,[O_COMMENT] [varchar](79) NULL

,PRIMARY KEY CLUSTERED ([O_ORDERKEY] ASC) WITH (

PAD_INDEX = OFF

,STATISTICS_NORECOMPUTE = OFF

,IGNORE_DUP_KEY = OFF

,ALLOW_ROW_LOCKS = ON

,ALLOW_PAGE_LOCKS = ON

,OPTIMIZE_FOR_SEQUENTIAL_KEY = OFF

) ON [PRIMARY]

) ON [PRIMARY] GO /****** Object: Table [dbo].[PART] Script Date: 15-10-2019 08:59:41 ******/

SET ANSI_NULLS ON GO

SET QUOTED_IDENTIFIER ON GO

CREATE TABLE [dbo].[PART] (

[P_PARTKEY] [int] NOT NULL

,[P_NAME] [varchar](55) NULL

,[P_MFGR] [char](25) NULL

,[P_BRAND] [char](10) NULL

,[P_TYPE] [varchar](25) NULL

,[P_SIZE] [int] NULL

,[P_CONTAINER] [char](10) NULL

,[P_RETAILPRICE] [decimal](18, 0) NULL

,[P_COMMENT] [varchar](23) NULL

,PRIMARY KEY CLUSTERED ([P_PARTKEY] ASC) WITH (

PAD_INDEX = OFF

,STATISTICS_NORECOMPUTE = OFF

,IGNORE_DUP_KEY = OFF

,ALLOW_ROW_LOCKS = ON

,ALLOW_PAGE_LOCKS = ON

,OPTIMIZE_FOR_SEQUENTIAL_KEY = OFF

) ON [PRIMARY]

) ON [PRIMARY] GO /****** Object: Table [dbo].[PARTSUPP] Script Date: 15-10-2019 08:59:41 ******/

SET ANSI_NULLS ON GO

SET QUOTED_IDENTIFIER ON GO

CREATE TABLE [dbo].[PARTSUPP] (

[PS_PARTKEY] [bigint] NOT NULL

,[PS_SUPPKEY] [bigint] NOT NULL

,[PS_AVAILQTY] [int] NULL

,[PS_SUPPLYCOST] [decimal](18, 0) NULL

,[PS_COMMENT] [varchar](199) NULL

,PRIMARY KEY CLUSTERED (

[PS_PARTKEY] ASC

,[PS_SUPPKEY] ASC

) WITH (

PAD_INDEX = OFF

,STATISTICS_NORECOMPUTE = OFF

,IGNORE_DUP_KEY = OFF

,ALLOW_ROW_LOCKS = ON

,ALLOW_PAGE_LOCKS = ON

,OPTIMIZE_FOR_SEQUENTIAL_KEY = OFF

) ON [PRIMARY]

) ON [PRIMARY] GO /****** Object: Table [dbo].[REGION] Script Date: 15-10-2019 08:59:41 ******/

SET ANSI_NULLS ON GO

SET QUOTED_IDENTIFIER ON GO

CREATE TABLE [dbo].[REGION] (

[R_REGIONKEY] [int] NOT NULL

,[R_NAME] [char](25) NULL

,[R_COMMENT] [varchar](152) NULL

,PRIMARY KEY CLUSTERED ([R_REGIONKEY] ASC) WITH (

PAD_INDEX = OFF

,STATISTICS_NORECOMPUTE = OFF

,IGNORE_DUP_KEY = OFF

,ALLOW_ROW_LOCKS = ON

,ALLOW_PAGE_LOCKS = ON

,OPTIMIZE_FOR_SEQUENTIAL_KEY = OFF

) ON [PRIMARY]

) ON [PRIMARY] GO /****** Object: Table [dbo].[SUPPLIER] Script Date: 15-10-2019 08:59:41 ******/

SET ANSI_NULLS ON GO

SET QUOTED_IDENTIFIER ON GO

CREATE TABLE [dbo].[SUPPLIER] (

[S_SUPPKEY] [int] NOT NULL

,[S_NAME] [char](25) NULL

,[S_ADDRESS] [varchar](40) NULL

,[S_NATIONKEY] [bigint] NOT NULL

,[S_PHONE] [char](15) NULL

,[S_ACCTBAL] [decimal](18, 0) NULL

,[S_COMMENT] [varchar](101) NULL

,PRIMARY KEY CLUSTERED ([S_SUPPKEY] ASC) WITH (

PAD_INDEX = OFF

,STATISTICS_NORECOMPUTE = OFF

,IGNORE_DUP_KEY = OFF

,ALLOW_ROW_LOCKS = ON

,ALLOW_PAGE_LOCKS = ON

,OPTIMIZE_FOR_SEQUENTIAL_KEY = OFF

) ON [PRIMARY]

) ON [PRIMARY] GO

SUMMARY

And that’s all there is to generating TPC-H data. Regarding the ETL World Record without trying too hard I have been able to load 2GB of data in 45 sec using an Azure VM 8 Virtual Cores and 32 GB Ram with 4 Standard SSDS of 500 IOPS. I have achieved significant amount of parallelism reaching the MaxConcurrent Task limit for the Package as well as the number of Threads as well as using Delayed Durablity forced. In addition to the above I am also using manually calculated values for Rows per Buffer and Max Buffer size for the DFT. When starting off the limit was the Disk IOPS but after scaling that out into multiple DISK LUNS the limiting factor was the CPU counts. I could have used Scale out for SSIS to achieve the additional CPU resources and running each table’s package on a different piece of hardware but it was a POC and I think I got my answer. I also tried using inmemory oltp tables but there was no significant gain.

WHAT ABOUT THE WORLD RECORD?

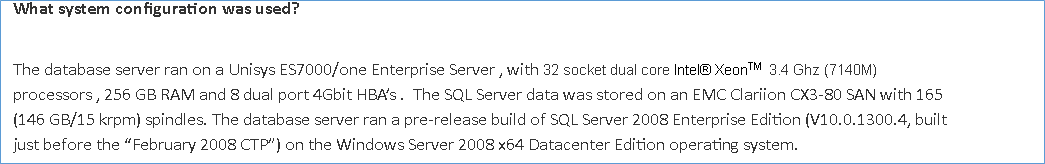

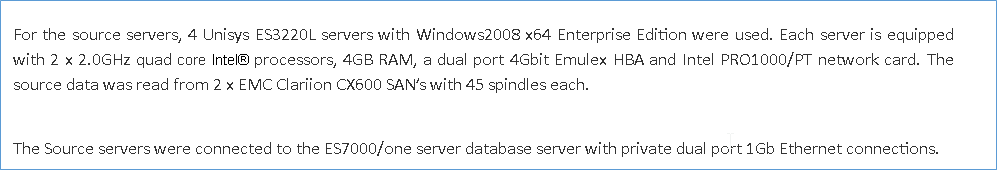

Considering the ETL world records configuration was pretty heavy (for the time) as mentioned below I think it pretty safe to say with the advent of the cloud and the improvements in database technologies the record stands on the whim of someone setting out to break it. I’d be happy to provided I was funded J

And there you have it. Using TPC-H to generate data.

Please Consider Subscribing