Last page contention is one of the textbook examples for latch contention. This frequently occurs on tables that are narrow and have high inserts happening against a sequential primary key column such as an identity column. Simply put on tables that have high concurrency you might have multiple users inserting data and generating some kind of order ID using the primary key column. Since multiple users are inserting in parallel we need to acquire an exclusive latch in the buffer to ensure that only one worker thread modifies the page in memory at a time. Because of the fact that only one worker can acquire a latch on the page recommend time any other workers have to wait until the latch has been released. An exclusive latch is incompatible with other latches and therefore this results in performance degradation due to this serial nature of the operation.

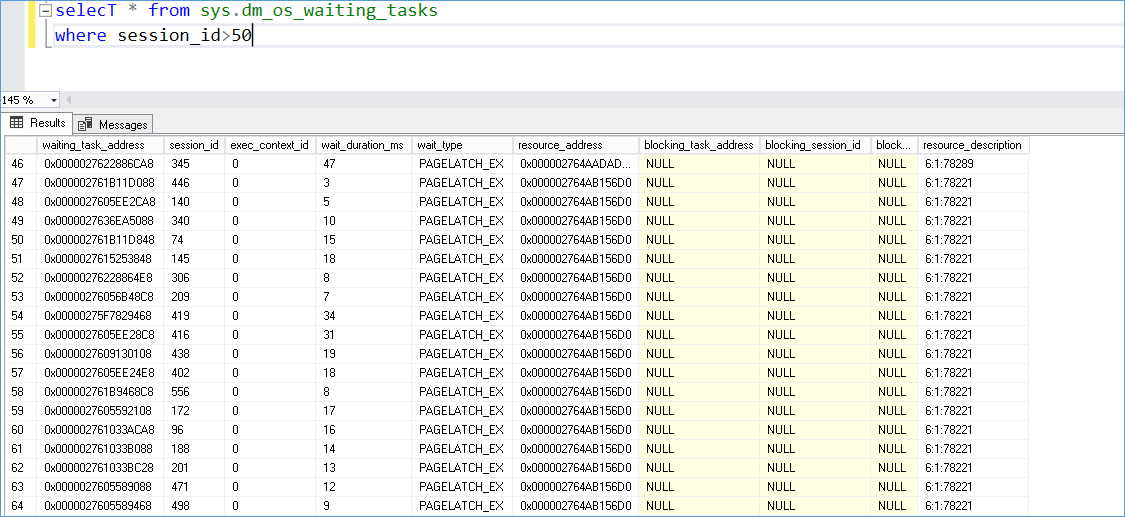

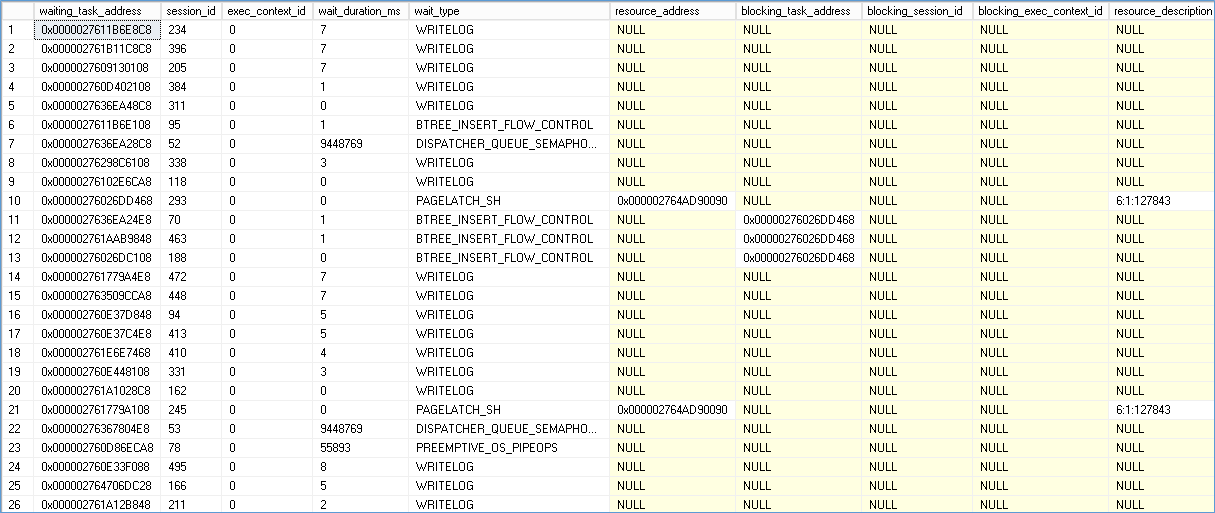

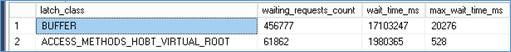

Please screenshot show what this might look like in a production environment.

In the above example we attempted to insert 500 rows per user for up to 500 users at a time. Resulting in 250000 inserts happening to the same table.

Starting in SQL 2019 we have the ability to optimize the Primary key clustered index to behave differently when dealing with SEQ keys thus avoiding contention. The syntax looks like below

ALTER TABLE [dbo].[latchcontentionseq] ADD PRIMARY KEY CLUSTERED ( [id] ASC)

WITH (

PAD_INDEX = OFF

,STATISTICS_NORECOMPUTE = OFF

,SORT_IN_TEMPDB = OFF

,IGNORE_DUP_KEY = OFF

,ONLINE = OFF

,ALLOW_ROW_LOCKS = ON

,ALLOW_PAGE_LOCKS = ON

,OPTIMIZE_FOR_SEQUENTIAL_KEY = ON

) ON [PRIMARY]

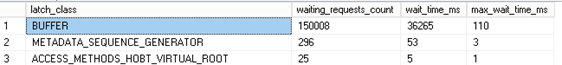

GOHaving made this change let’s rerun the query and measure the impact.

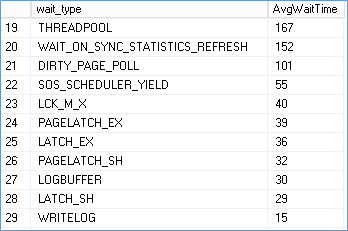

Comparison

BEFORE

OSTRESS exiting normally, elapsed time: 00:04:15.196

AFTER

OSTRESS exiting normally, elapsed time: 00:01:10.368

How is this achieved?

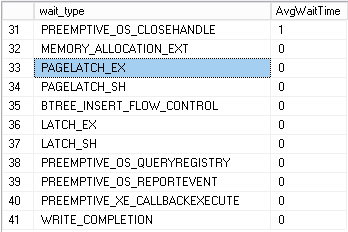

The core assumption on how this works has to do with the fact that not all waits are equal. If there are 500 inserts into a table let’s assume they all contribute equal wait times to the insert operation. In such cases there is really not scope for improvement and setting this option might actually degrade performance. However what is observed in the real world is out of 500 inserts say every 100th insert is significantly slower due to the fact that the page is full and it has request a new page. So we can assume insert 1-99 take .01 ms but insert 100 takes 1 ms. The excess wait results in a backlog as inserts 101 – 199 now get stuck behind insert 100. By using the new wait type BTREE_INSERT_FLOW_CONTROL preference is given to threads that will not cause such delays and therefore increase throughput or those threads. You could think of it like converting a single lane where buses and cars got stuck behind each other due to buses having slower speeds into two lanes one a dedicated bus lane and another a dedicated car lane. Thus allowing many more cars to pass through even if the buses are still slow.

Please Consider Subscribing